When you build your Python application and its dependencies for production, you want your builds to be predictable and deterministic. Therefore, always pin your dependencies.

Update: A newer blog post about the future of pip-tools is available too: Better Package Management.

Pin Explicitly

Don’t ever use these styles in requirements.txt:

lxml
lxml>=2.2.0
lxml>=2.2.0,<2.3.0

Instead, pin them:

lxml==2.3.4

If you don’t, you can never know what you’ll get when you run pip install.

Even if you rebuild the env every time, you still can’t predict it. The outcome depends on a) what’s currently installed, and b) which version is current on PyPI.

Eventually, all of your environments, and those of your team members, will drift out of sync. Even worse, this cannot be fixed by rerunning pip install. It’s just waiting for bad things to happen in production.

The only way to make your builds deterministic is to pin every single package dependency (even the dependencies’ dependencies).

WARNING: Don’t pin by default when you’re building libraries! Only use pinning for end products.

The biggest complaint from folks regarding explicit pinning is that you won’t benefit from updates that way. Well, yes, you won’t. But think about it: you cannot tell in advance whether a new release fixes bugs or introduces them. You are leaving it up to chance. There is only one way to retake control: pin every dependency.
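To make the rule checkable, here is a minimal sketch of a script that flags requirement lines that are not pinned with `==`. The function name `find_unpinned` and the regular expression are illustrative assumptions, not part of pip or pip-tools, and the pattern is deliberately simple: it does not handle extras, environment markers, or URLs.

```python
import re

# A line counts as pinned only if it is "name==version" with nothing else.
# Simplified pattern (assumption): no extras, markers, or wildcards.
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*==[A-Za-z0-9._+!-]+$")


def find_unpinned(lines):
    """Return the requirement lines that are not pinned with '=='."""
    unpinned = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned


if __name__ == "__main__":
    sample = ["lxml", "lxml>=2.2.0", "lxml>=2.2.0,<2.3.0", "lxml==2.3.4"]
    for line in find_unpinned(sample):
        print("not pinned:", line)
```

Run against the four styles shown earlier, only `lxml==2.3.4` passes. A check like this could run in CI so that an unpinned line never reaches production unnoticed.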

Check for Updates Automatically

So: we want to pin packages, but don’t want to let them become outdated. The solution: use a tool that can check for updates. This is exactly what I built pip-tools for.

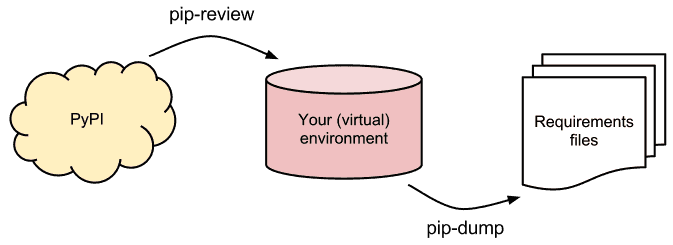

pip-tools is the collective name for two tools: pip-review and pip-dump.

pip-review

pip-review checks for available updates of all packages currently installed in your environment, and reports any it finds:

$ pip-review
requests==0.14.0 available (you have 0.13.2)
redis==2.6.2 available (you have 2.4.9)
rq==0.3.2 available (you have 0.3.0)

You can also install them automatically:

$ pip-review --auto
...

or interactively decide whether you want to install each package:

$ pip-review --interactive
requests==0.14.0 available (you have 0.13.2)
Upgrade now? [Y]es, [N]o, [A]ll, [Q]uit y
...
redis==2.6.2 available (you have 2.4.9)
Upgrade now? [Y]es, [N]o, [A]ll, [Q]uit n
rq==0.3.2 available (you have 0.3.0)
Upgrade now? [Y]es, [N]o, [A]ll, [Q]uit y
...

It’s advisable to pick a fixed schedule to run pip-review. For example, run it every Monday during a weekly standup meeting with your engineering team. Make it a point on the agenda. You discuss pip-review’s output, inspect changelogs, or just blindly upgrade the packages. The important part is that you do it explicitly. This gives you the chance to run with the upgraded versions for a while in a development environment, before pushing those versions to production.
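The kind of report pip-review prints can be approximated with a few lines of Python. This is only a sketch of the idea, not pip-review's actual code: the names `parse_version` and `update_report` are hypothetical, the latest versions are passed in as a plain dict instead of being looked up on PyPI, and versions are assumed to be plain dotted integers.

```python
def parse_version(version):
    """Turn '0.13.2' into (0, 13, 2); only handles plain dotted integers."""
    return tuple(int(part) for part in version.split("."))


def update_report(installed, latest):
    """Yield one line per package whose latest version is newer.

    Both arguments map package names to version strings. In practice
    `latest` would come from a package index lookup, omitted here.
    """
    for name in sorted(installed):
        current = installed[name]
        newest = latest.get(name, current)  # unknown packages count as current
        if parse_version(newest) > parse_version(current):
            yield f"{name}=={newest} available (you have {current})"


if __name__ == "__main__":
    installed = {"requests": "0.13.2", "redis": "2.4.9", "rq": "0.3.0"}
    latest = {"requests": "0.14.0", "redis": "2.6.2", "rq": "0.3.2"}
    for line in update_report(installed, latest):
        print(line)
```

Real version strings can carry pre-release and post-release markers, so a production tool would need a proper version parser rather than this tuple comparison.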

pip-dump

Whereas pip-review solves the problem of how to check for updates of pinned packages, pip-dump focuses on the problem of how to dump those definitions into requirements files, managed under version control.

Typically, in Python apps, you include a requirements.txt file in the root of your project directory, and you run pip freeze > requirements.txt periodically. While this works for simple projects, it doesn’t scale. Some packages are installed for development or personal purposes only, and you don’t want those in requirements.txt, where they would go to production or be visible to your other team members.

pip-dump provides a smarter way to dump requirements. It understands the convention of separating requirements into multiple files, following the naming convention:

- requirements.txt is the main (and default) requirements file;
- dev-requirements.txt, or test-requirements.txt, or actually, *requirements.txt, are secondary dependencies.

When you have a requirements.txt and dev-requirements.txt file in your project, with the following content:

# requirements.txt
Flask==0.9

# dev-requirements.txt
ipython

Then simply running pip-dump will result in the following output:

# requirements.txt
Flask==0.9
Jinja2==2.6
Werkzeug==0.8.3

# dev-requirements.txt
ipython==0.13

It keeps the files sorted for tidiness, and to reduce the chance of merge conflicts in version control.
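The behaviour in the example above can be sketched roughly as follows. This is an illustration under assumptions, not pip-dump's real implementation: the function `dump_requirements` is hypothetical, the frozen environment is passed in as a dict rather than read from pip freeze, and the rule that unclaimed packages land in requirements.txt is inferred from the example output.

```python
def dump_requirements(files, frozen):
    """Re-pin every requirements file from the frozen environment.

    files:  maps a file name to the package names it currently lists;
            must include "requirements.txt".
    frozen: maps every installed package name to its installed version.
    Returns a mapping of file name to sorted, pinned requirement lines.
    """
    main = "requirements.txt"
    # Packages already listed in a secondary file stay in that file.
    secondary = {
        name.lower()
        for fname, names in files.items()
        if fname != main
        for name in names
    }
    result = {}
    for fname, names in files.items():
        listed = {name.lower() for name in names}
        if fname == main:
            # The main file receives everything no secondary file claims.
            wanted = [n for n in frozen if n.lower() not in secondary]
        else:
            wanted = [n for n in frozen if n.lower() in listed]
        # Case-insensitive sort keeps diffs small and stable.
        result[fname] = sorted(
            (f"{n}=={frozen[n]}" for n in wanted), key=str.lower
        )
    return result


if __name__ == "__main__":
    files = {"requirements.txt": ["Flask"], "dev-requirements.txt": ["ipython"]}
    frozen = {"Flask": "0.9", "Jinja2": "2.6", "Werkzeug": "0.8.3", "ipython": "0.13"}
    for fname, lines in dump_requirements(files, frozen).items():
        print(f"# {fname}")
        print("\n".join(lines))
```

Flask's dependencies (Jinja2 and Werkzeug) end up pinned in requirements.txt because no secondary file claims them, while ipython stays in dev-requirements.txt.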

You can even put packages in an optional file called .pipignore. This is useful if you want to keep some packages installed in your local environment, but don’t want to have them reflected in your requirements files.
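The effect of an ignore file can be pictured as a filter applied before anything is written out. The sketch below assumes one package name per line in the ignore file, which may not match the real .pipignore format, and both function names are hypothetical.

```python
def load_ignored(text):
    """Parse ignore-file content, assuming one package name per line."""
    return {line.strip().lower() for line in text.splitlines() if line.strip()}


def drop_ignored(frozen, ignored):
    """Return the frozen packages minus those named in the ignore set."""
    return {
        name: version
        for name, version in frozen.items()
        if name.lower() not in ignored
    }


if __name__ == "__main__":
    ignored = load_ignored("ipdb\n\nbpython\n")
    frozen = {"Flask": "0.9", "ipdb": "0.7", "bpython": "0.11"}
    print(drop_ignored(frozen, ignored))
```

With this in place, locally installed helpers stay in your environment but never appear in any requirements file.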

Contributing

pip-tools 0.x is already relied on by many on a daily/weekly basis. It’s worth noting that we’re working on Better Package Management too, which will be the future of pip-tools. If you want to contribute, please shout out.